The following is written in my personal capacity, and does not represent the views of the SpiderMonkey team, Mozilla, TC39 or any other broader group than just what’s between my ears. I wear no hats here.

I want to propose a little thought experiment for the JS language. It’s pretty janky, there’s lots to quibble about, but I find myself coming back to this again and again and again.

tl;dr: We should add user-defined primitives to JS.

Motivation

Unless you’ve been in my brain (or suffered me talking about this in person), you’re probably wondering: What does that even mean?

Let’s take a step back. Working on a JS engine I get to watch the language evolve. JavaScript evolves by proposal, each going through a set of stages.

What I have seen over my time working on JS is new proposals coming from the community that want to add new primitives to the language (BigInt, Decimal, Records & Tuples).

New proposals want to add primitives for a variety of reasons:

- They want operator overloading

- They want identity-less objects

- They want a divorce from the object protocol

Of course, as soon as a proposal has this shape, it has to come to TC39, because this isn’t the kind of thing that can just ship as a library.

There’s a problem here though: Engine developers are reluctant to add new primitives. Primitives have a high implementation cost, and there's concern that each one adds branches to fast paths, causing performance regressions for code that never interacts with the feature. For those who think Decimal would be awesome, it seems an easy price to pay, but for those who will never use it, it would sort of suck for the internet to get just a -little- bit slower so that people could have fewer libraries and easier interoperability. Furthermore, each new primitive takes a type tag in our value representations... these are a limited resource, barring a huge redesign of JS engines.

There’s another problem here: TC39 is required to gatekeep all these things. This makes the evolution and exploration of the solution space slow and fraught. Even more scary: What if TC39 gets the design wrong, and as a result we don’t see a feature actually getting used, or it is forever replaced by some better polyfill?

Some people both outside and inside the JS community make fun of the “Framework of the Week” -- the fast churn that happens in the JS community. The thing is, I think this is actually a fantastic thing! We want language communities to be able to explore other solutions, find trade-offs, discover novel solutions, etc. Forcing everything through the narrow waist of TC39 is sometimes a technical necessity, but it also deprives the community of one of its strengths.

So what do we do about it? User Defined Primitives: JavaScript authors should be able to write their own primitive types and ship them as libraries to JS engines. The manifesto should be something along the lines of “Why just Decimal? Why not Rational? Why not vec3? vec4? ImmutableSet?”

What does success look like?

The JS community gets to write hundreds of libraries exploring the whole space of primitives. We see these libraries evolve, fork and split, finding good points on the trade-off space.

Ideally, we’d even see some user-defined primitives become so popular, so intrinsic to the way that people write JS, that we decide to simply re-host those user-defined intrinsics right into the official JS spec.

Sure... but how?

So it is simple enough to have a rallying cry. The reason I’m writing this however is that, as an engine implementer, I have a sketch of how I think we could get this to work. I’ll come to some gaps shortly.

One thing that I keep in the back of my head about this work is: I think a successful design for this would provide a pathway for BigInt to be re-specified as a “Specification Defined Primitive”. Essentially, if you could do user-defined primitives in the past, before BigInt, then you could have simply had BigInt be a library, that perhaps later got some special syntactic sugar (n suffix).
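To make that bar concrete, here is a small sketch of what BigInt already does in standard JS today: it has its own `typeof` result, it has no identity, operators work on it, and mixing it with Number in arithmetic throws. The variable names (`a`, `b`, `facts`, `mixedError`) are mine, purely for illustration.

```javascript
// Existing, standard BigInt behaviour: roughly what a
// "Specification Defined Primitive" would need to reproduce.
const a = 2n ** 64n;
const b = 18446744073709551616n;

const facts = {
  typeTag: typeof a,        // "bigint": its own typeof result
  noIdentity: a === b,      // true: compared by value, not by identity
  operators: (a + 1n) - b,  // 1n: arithmetic operators work directly
};

// Mixing BigInt and Number in arithmetic throws a TypeError.
let mixedError = null;
try {
  a + 1;
} catch (e) {
  mixedError = e;
}

console.log(facts.typeTag, facts.noIdentity, facts.operators);
console.log(mixedError instanceof TypeError);
```

A user-defined primitive design would need some way to express each of these behaviours, including the choice to throw on mixed types.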

A Strawman Design

So let’s build out a strawman design. The biggest point I want to make is that I think all the pieces are technically feasible; the challenge is agreeing on how this should all look.

As a preamble: Proxy is a type that exposes to users the internal protocol of Objects from the specification (sometimes called the meta-object protocol). Proxies have traps so that users can customize what happens during the execution of this internal protocol.
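For anyone who hasn't used Proxy, here is a minimal sketch using the standard `Proxy` and `Reflect` APIs. It installs a `get` trap that records every property read before falling through to the default behaviour. The names `target`, `logged` and `log` are just for illustration.

```javascript
// Standard Proxy API: the `get` trap intercepts the internal [[Get]] operation.
const log = [];
const target = { x: 1, y: 2 };

const logged = new Proxy(target, {
  get(obj, key, receiver) {
    log.push(String(key));                  // record every property read
    return Reflect.get(obj, key, receiver); // then do what [[Get]] would normally do
  },
});

const sum = logged.x + logged.y;
console.log(sum); // 3
console.log(log); // the two reads, in order: x then y
```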

What if we specified the operations on primitives in terms of a “primitive operation protocol”, and then specified user-defined primitives as special kinds of data that have a user-implemented primitive operation protocol?

One important thing I tried to avoid was saying that these user-defined primitives are Objects. I actually think it’s important that they’re not Objects, and that they exist in a totally different space than Objects. In my opinion this makes things like operator overloading much more manageable, as you would only ever allow operator overloading on these Primitive values. It also means only Primitives pay the cost of operator overloading, as you already have to type check at each operator to choose the right semantics.

So what does this look like? Let’s start with a fairly gross version of this, where we set all the moving pieces out explicitly.

Suppose we had a Primitive constructor, that worked a lot like Proxy, in that it took a trap object. Unlike Proxy however, what this constructor returns is a function, which when invoked, returns primitives with the traps installed. For performance, traps should be resolved eagerly, and be immutable.

let traps = {

// We'll discuss the contents of this in a second.

}

let Vec3 = new Primitive(traps);

let aVec = Vec3(0, 0, 1);

let bVec = Vec3(0, 0, 1);

(aVec === bVec) // true -- no identity!

Off to a good start. Next, let’s start designing the trap set.

Well, let’s start with the most basic: What do you do with the arguments to the construction function, and what’s the actual data model here?

To avoid having identity, we need to define the == and === operators to compare these elements somehow. This means we have to define a data-model for primitives. I’d propose the following:

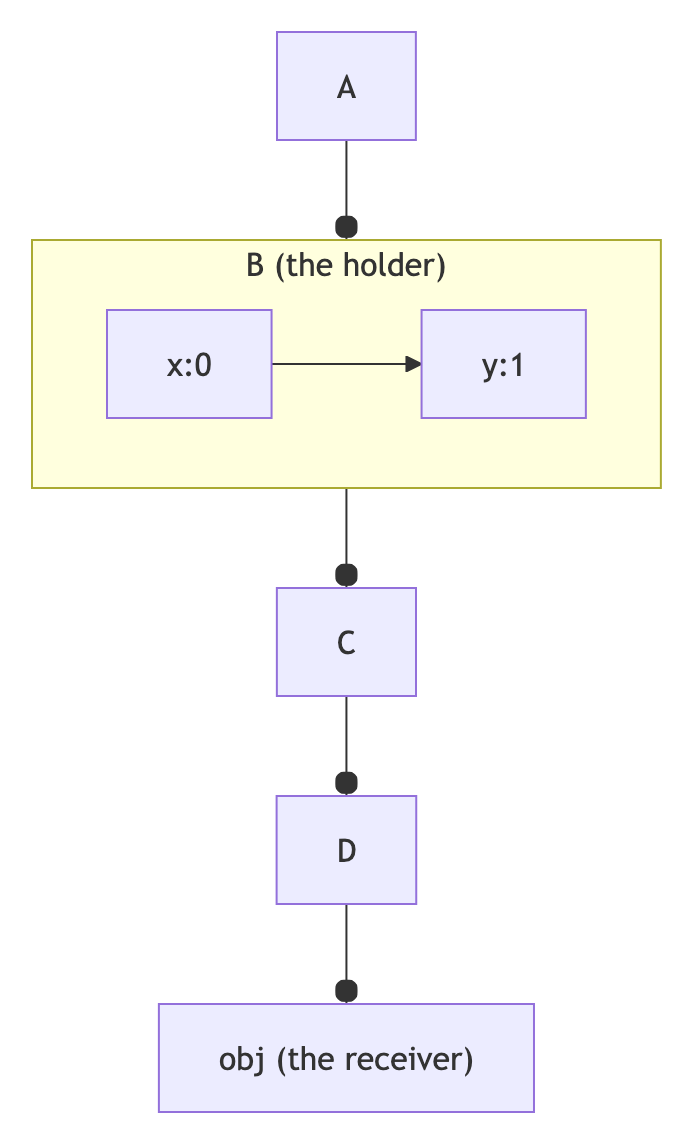

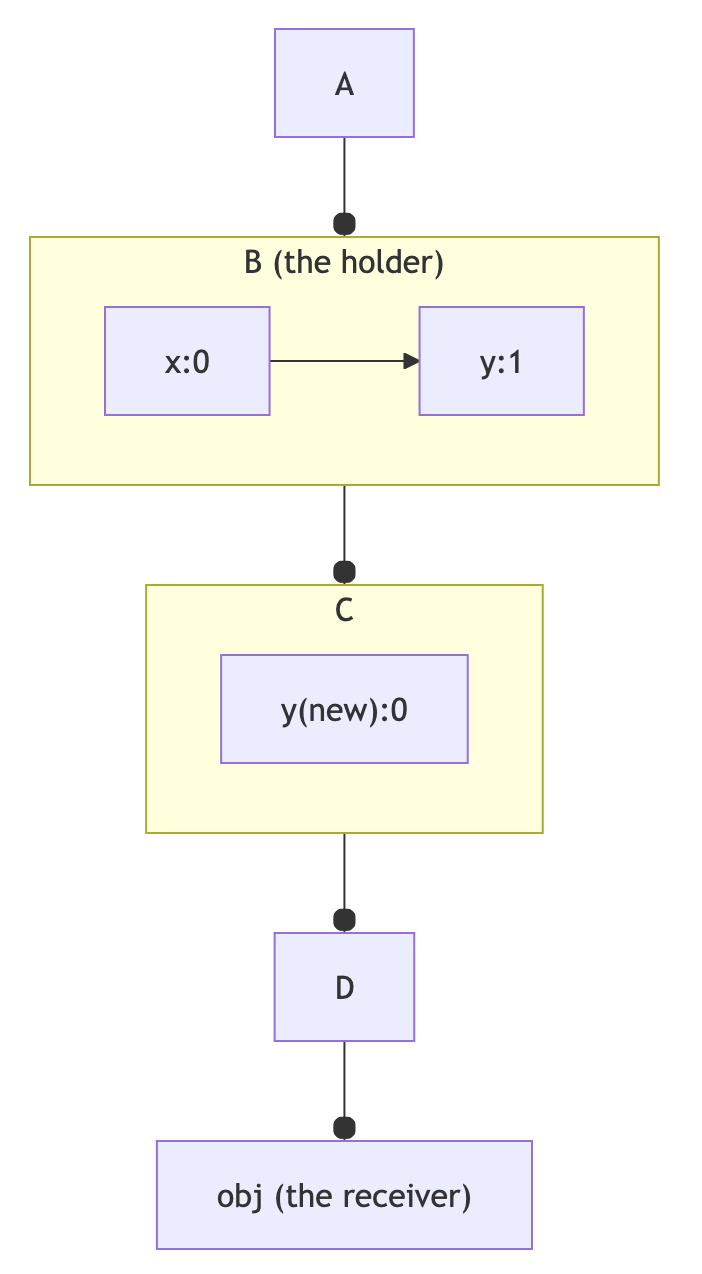

A Primitive is a collection of data with named ‘slots’, and primitives are always compared slot-wise.

In a JS engine implementation a Primitive is a special type, with a single type tag.
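Slot-wise comparison can be emulated in userland today, which is a handy way to poke at the semantics. The sketch below is only an emulation: it models a primitive as a frozen object, which is exactly what a real Primitive would not be. The names `makeValue`, `isValue` and `slotwiseEquals` are hypothetical. It also assumes that nested emulated primitives compare recursively and everything else compares with `===`, which is a design decision the strawman leaves open.

```javascript
// Userland emulation only: a "primitive" is modelled as a frozen { shape, slots } record.
function makeValue(shape, slots) {
  return Object.freeze({ shape, slots: Object.freeze({ ...slots }) });
}

function isValue(v) {
  return typeof v === 'object' && v !== null && 'shape' in v && 'slots' in v;
}

const hasOwn = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

// Slot-wise comparison: same shape, same slot names, equal slot contents.
// Assumption: nested emulated primitives compare recursively, everything else uses ===.
function slotwiseEquals(a, b) {
  if (!isValue(a) || !isValue(b)) return a === b;
  if (a.shape !== b.shape) return false;
  const names = Object.keys(a.slots);
  if (names.length !== Object.keys(b.slots).length) return false;
  return names.every(
    (name) => hasOwn(b.slots, name) && slotwiseEquals(a.slots[name], b.slots[name])
  );
}

const aVec = makeValue('Vec3', { x: 0, y: 0, z: 1 });
const bVec = makeValue('Vec3', { x: 0, y: 0, z: 1 });
const cVec = makeValue('Vec3', { x: 0, y: 1, z: 0 });

console.log(aVec === bVec);              // false: the emulation still has object identity
console.log(slotwiseEquals(aVec, bVec)); // true: what === would say for real primitives
console.log(slotwiseEquals(aVec, cVec)); // false
```

One thing the emulation surfaces immediately is the NaN question: with `===` per slot, a primitive holding NaN would not equal itself.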

So let’s extend our traps object to provide ‘construction’ mechanics.

let traps = {

constructor(primitive, x,y,z) {

// Design Question: Do we always provide getters for slots?

// Suppose for the purposes of this post, we do.

Primitive.setSlot(primitive, 'x', x);

Primitive.setSlot(primitive, 'y', y);

Primitive.setSlot(primitive, 'z', z);

}

}

Now a Vec3 has 3 slots to store data in, named x, y, z. In a JS engine, a Primitive right now consists of:

- An internal slot which holds all the processed trap functions as well as the count and names of the slots. This would look and work a lot like a Shape (SM) or Map (V8), concepts which already exist.

- A number of slots which hold JS values.
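The two-part layout above can be sketched in userland as a rough emulation. Everything here is hypothetical: `EmulatedPrimitive`, `define`, `setSlot`, `getSlot` and `internShape` are names I made up to stand in for the strawman's `Primitive`, and a real engine would do this natively rather than with frozen objects.

```javascript
// Userland emulation of the layout: one interned shape (traps + slot names) plus slot values.
const shapeCache = new Map(); // trap set -> (slot-name key -> shape)

function internShape(traps, slotNames) {
  let byNames = shapeCache.get(traps);
  if (!byNames) shapeCache.set(traps, (byNames = new Map()));
  const key = slotNames.join('\u0000');
  let shape = byNames.get(key);
  if (!shape) {
    shape = Object.freeze({ traps, slotNames: Object.freeze([...slotNames]) });
    byNames.set(key, shape);
  }
  return shape;
}

const EmulatedPrimitive = {
  // Returns a factory function, mirroring `new Primitive(traps)` in the strawman.
  define(userTraps) {
    const traps = Object.freeze({ ...userTraps }); // resolved eagerly, immutable afterwards
    return (...args) => {
      const building = { names: [], values: [] };  // mutable only during construction
      traps.constructor(building, ...args);
      return Object.freeze({
        shape: internShape(traps, building.names),
        slots: Object.freeze(building.values),
      });
    };
  },
  setSlot(building, name, value) {
    const i = building.names.indexOf(name);
    if (i === -1) {
      building.names.push(name);
      building.values.push(value);
    } else {
      building.values[i] = value;
    }
  },
  getSlot(value, name) {
    return value.slots[value.shape.slotNames.indexOf(name)];
  },
};

const Vec3 = EmulatedPrimitive.define({
  constructor(p, x, y, z) {
    EmulatedPrimitive.setSlot(p, 'x', x);
    EmulatedPrimitive.setSlot(p, 'y', y);
    EmulatedPrimitive.setSlot(p, 'z', z);
  },
});

const a = Vec3(1, 2, 3);
const b = Vec3(4, 5, 6);
console.log(a.shape === b.shape);               // true: both values share one shape
console.log(EmulatedPrimitive.getSlot(b, 'y')); // 5
```

Because the trap set is copied and frozen once in `define`, later mutation of the object the user passed in has no effect, which is the "resolved eagerly, and immutable" property from earlier.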

So far in our story, the following should work:

const pos = Vec3(1, 2, 3)

const target = Vec3(100, 100, 0);

function distance(v1, v2) {

return Math.sqrt(

(v2.x - v1.x) * (v2.x - v1.x)

+ (v2.y - v1.y) * (v2.y - v1.y)

+ (v2.z - v1.z) * (v2.z - v1.z)

);

}

let distanceToTarget = distance(pos, target);

What else would we need? Well, here’s where we can add operator overloading. Of course, we do this by adding another trap:

add: (a, b) => {

// Design Question: What do you do with mixed types?

// It's tempting to just say throw, but it would

// foreclose on the possibility of scaling a

// vector by N * Vec3(a, b, c);

return Vec3(a.x + b.x, a.y + b.y, a.z + b.z);

},
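Here is a userland sketch of the dispatch an engine might perform at each operator: one check for "is either operand a user-defined primitive?", then either the built-in path or a trap. All names (`applyOperator`, `TRAPS`, `vec3`, `isVec3`, `builtins`) are hypothetical, and the `mul` trap shows just one possible answer to the mixed-type question, where scalars are allowed on either side.

```javascript
// Userland emulation of operator dispatch (hypothetical names throughout).
const TRAPS = Symbol('traps');

const vec3Traps = Object.freeze({
  add(a, b) {
    if (!isVec3(a) || !isVec3(b)) throw new TypeError('Vec3 + non-Vec3 is not defined');
    return vec3(a.x + b.x, a.y + b.y, a.z + b.z);
  },
  mul(a, b) {
    // One possible answer to the mixed-type question: allow scalar * vector.
    if (typeof a === 'number') return vec3(a * b.x, a * b.y, a * b.z);
    if (typeof b === 'number') return vec3(a.x * b, a.y * b, a.z * b);
    return vec3(a.x * b.x, a.y * b.y, a.z * b.z); // component-wise product
  },
});

function vec3(x, y, z) {
  return Object.freeze({ [TRAPS]: vec3Traps, x, y, z });
}

function isVec3(v) {
  return typeof v === 'object' && v !== null && v[TRAPS] === vec3Traps;
}

const builtins = { add: (a, b) => a + b, mul: (a, b) => a * b };

// What `a + b` or `a * b` might do: one type check, then the built-in path or a trap.
function applyOperator(name, a, b) {
  const traps = (a && a[TRAPS]) || (b && b[TRAPS]);
  if (!traps) return builtins[name](a, b); // ordinary values never reach a trap
  const trap = traps[name];
  if (typeof trap !== 'function') throw new TypeError(`no ${name} trap`);
  return trap(a, b);
}

const sum = applyOperator('add', vec3(1, 2, 3), vec3(1, 1, 1));
const scaled = applyOperator('mul', 2, vec3(1, 2, 3));
console.log(sum.x, sum.y, sum.z);          // 2 3 4
console.log(scaled.x, scaled.y, scaled.z); // 2 4 6
console.log(applyOperator('add', 1, 2));   // 3
```

This sketch dodges the case of two different user-defined primitive types meeting at one operator (it simply picks the left operand's traps), which is a question a real design would have to answer.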

Write the rest of the definitions for common math and this should work:

let product = Vec3(1, 2, 4) * Vec3(1, 2, 3);

// Using distance-from-0,0,0 as a poor-man's .magnitude() function.

let mag = distance(product, Vec3(0, 0, 0));

let scale = Vec3(1 / mag, 1 / mag, 1 / mag)

let norm = product * scale;

Now, this makes you program with free functions (like distance above) for anything beyond the regular operators.

Of course, we could then define Object Wrappers for these user-defined primitives if we wanted to, potentially allowing someone to write Vec3(1, 3, 4).magnitude().
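For reference, this is how methods on existing primitives already work in standard JS: the primitive itself is not an object, but property access consults its wrapper's prototype. The first half of the sketch below is existing behaviour. The second half (`Vec3Prototype`, `vec3`) is a hypothetical object-based stand-in, only there to show the shape of what a wrapper prototype for a user-defined primitive might offer.

```javascript
// Standard JS today: property access on a primitive consults its wrapper's prototype.
const n = 3;

console.log(typeof n);                // "number": n itself is not an object
console.log(n.toFixed(1));            // "3.0": the method lives on Number.prototype
console.log(typeof Object(n));        // "object": an explicit wrapper object
console.log(Object(n) === Object(n)); // false: wrappers have identity, primitives do not

// Hypothetical analogue: a wrapper prototype for an emulated Vec3.
const Vec3Prototype = {
  magnitude() {
    return Math.sqrt(this.x * this.x + this.y * this.y + this.z * this.z);
  },
};
const vec3 = (x, y, z) =>
  Object.freeze(Object.assign(Object.create(Vec3Prototype), { x, y, z }));

console.log(vec3(1, 2, 2).magnitude()); // 3
```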

Ok... it’s a sketch. Kinda thin.

Well, yes. I have hemmed and hawed about this idea for more than two years now. I kept thinking “Y’know, we have all the infrastructure to experiment with this, you could build a prototype”, and kept not finding the time.

I’m publishing this blog post for two reasons:

- I want to stop thinking about this and ‘close the open loop’ in my brain.

- I hope it sparks some conversation. I see this as a totally viable way for the JS community to get loads of things it wants, while getting engine developers and TC39 out of the way.

Ok... sounds good, what’s left to do?

This really is a sketch. There are so many design decisions left unsaid here:

Syntactically, what does a user-defined primitive look like? Should you only provide these to a new class like syntax sugar? (e.g. value Vec3 { add(){} })

How do you handle variable-length primitives? It would be amazing if you could make Records and Tuples work here, but both are arbitrarily sized. Maybe that’s fine?

recordTraps = {

constructor(primitive, ...args) {

// The nice thing here is that it makes comparisons between records and tuples

// of different lengths quick, because the "PrimitiveShape" check you'd likely want

// would be a fast path.

// Assumes args is a list of [key, value] pairs.

for (const [k, v] of args) {

Primitive.setSlot(primitive, k, v);

}

}

}
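To show why the shape check makes this cheap, here is a userland sketch where each record is an interned shape plus a list of values. The names `internShape`, `record` and `recordEquals` are hypothetical, and sorting keys so that insertion order doesn't matter is my assumption, not something the strawman specifies.

```javascript
// Userland emulation: records as (interned shape, values) pairs.
const shapes = new Map();

function internShape(keys) {
  const id = JSON.stringify(keys);
  if (!shapes.has(id)) shapes.set(id, Object.freeze([...keys]));
  return shapes.get(id);
}

function record(entries) {
  // Assumption: sort by key so that insertion order does not affect the shape.
  const sorted = [...entries].sort(([a], [b]) => (a < b ? -1 : a > b ? 1 : 0));
  return Object.freeze({
    shape: internShape(sorted.map(([k]) => k)),
    values: Object.freeze(sorted.map(([, v]) => v)),
  });
}

function recordEquals(a, b) {
  // Fast path: different key sets (including different lengths) mean different shapes.
  if (a.shape !== b.shape) return false;
  return a.values.every((v, i) => v === b.values[i]);
}

const r1 = record([['x', 1], ['y', 2]]);
const r2 = record([['y', 2], ['x', 1]]);
const r3 = record([['x', 1], ['y', 2], ['z', 3]]);

console.log(recordEquals(r1, r2)); // true
console.log(recordEquals(r1, r3)); // false, decided by the shape check alone
```

The cost moves to construction time, where every new key set has to be interned, so whether this is "fine" probably depends on how many distinct shapes real programs create.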

Speaking of Tuples... do you allow slot indexes? In general? Opt in?

How do you handle realms? Primitives can be shipped freely between realms, but presumably the code which powers a primitive is stuck in one realm? This is actually being discussed in committee already to handle the same problem in Shared Structs.

Speaking of... Structs really overlap a lot with this. Too much? Maybe? Can we do anything to make this work with them nicely?

Similarly, Structs is trying to answer the question: How do you give these things methods? Do Primitives get object wrappers?

Do we allow extended primitives to store object references or do we mandate they don’t; or is this part of the primitive protocol?

JSON.stringify, toStringTag, etc.

Structured Cloning.

Pushing this to completion would be a huge undertaking. Almost certainly years and years of standards work. Yet, I think it would be a fascinating and worthwhile undertaking.

Brian Goetz recently gave a talk called “Postcards from the Peak of Complexity”. He highlights how shipping too soon could have bad effects, but by waiting and working through issues you really could find a simple core. I think that simple core is in here.

If I were to tackle this, I’d approach the problem from two ends:

- I’d really start building the prototype for what these things look like. Get a playground up, let people get a feel for what works and what’s rough, and explore.

- At the same time however, I’d make a serious effort at using BigInt as a guide to how you would include these things in the specification -- coming back to my success criteria, I think if BigInt can be specified as a built-in Primitive type, you’ve really won here.

Why aren’t you presenting this at plenary?

I don’t think I have enough buy-in for this idea to come to plenary, both within Mozilla, and without. So for now, let’s talk about this like it’s a blog post and not a real proposal. If it ever became a real proposal maybe I’d present.

Matt, you’re describing Value Types from Java.

Yes, thank you for noticing a heavy influence. Value types in Java have a fantastically pithy phrase which captures some of the power here: Codes like a class, works like an int.

I’ve been too far away from the Java ecosystem to really have strong opinions about them. I also think ultimately JS primitives would look different than Java Value types, if only because JS is a dynamically typed language, but I do think it’s a huge inspiration to this post.

Conclusion

I want to challenge the JS Community to think big about how the language could evolve to solve real challenges. I think this sketch provides a possible path forward to solve a whole bunch of problems in the language ecosystem. However, I’d be lying if I said I wasn’t scared of the long tail of complexity this would potentially entail.

Any background reading? Watching?

Java

JavaScript

Acknowledgements

- Thanks to Ben Visness for helping workshop out a Vec3 type, and reading drafts of this.

- Thanks to Iain Ireland, Eemeli Aro for providing draft feedback.

- Thanks to Steve Fink for catching post-publication errors :)

- Thanks to the SpiderMonkey team for letting me ramble about this stuff.

- Thanks to Nicolo Ribaudo and Tim Chevalier from Igalia for their prototyping work on Records and Tuples which inspired a lot of this thinking.

- Thanks to anyone who has listened to me about this!